Blog

#24: LLM Council and The Future of College Admissions

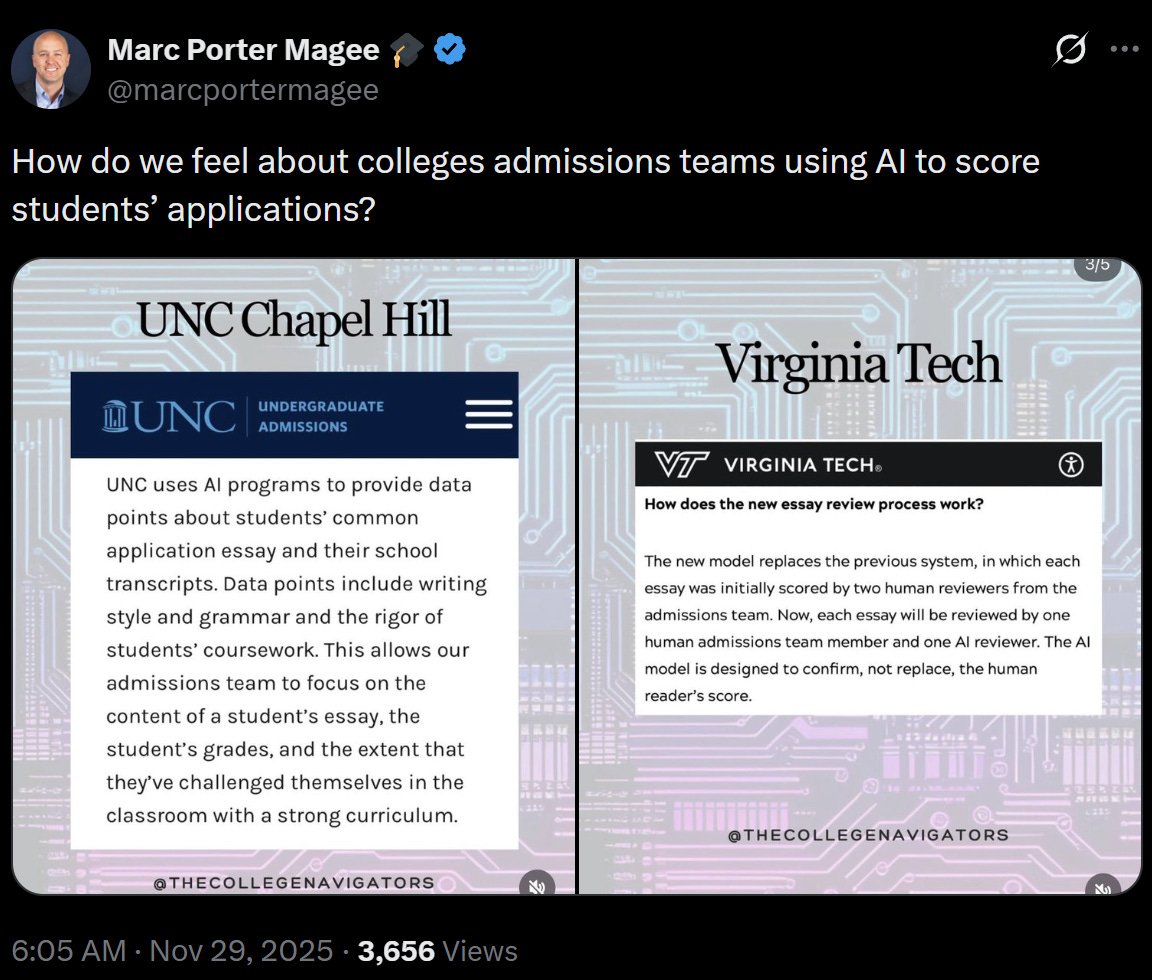

Using AI to Review College Applications

11/30/2025

#23: Why AI Needs Crash Test Dummies

Lessons from 50 Years of Car Safety

11/16/2025

#22: Are Browser Agents a Security Wild Card?

The next evolution in how we browse.

10/27/2025

#21: What the AI Industry Can Learn from the Automotive Industry

Last time, we discussed: What if Waymo worked like ChatGPT? We contrasted how self-driving vehicles are built (slow, safety-first) with how LLMs have grown (fast, scale-first).

10/12/2025

#20: What If Waymo Worked Like ChatGPT? Would We All Be Safe?

Last month, Waymo released its latest safety impact report and shared that it has completed 96 million driverless miles. The report revealed something remarkable: Waymo’s vehicles are 91% less likely to be involved in crashes resulting in serious injury

10/6/2025

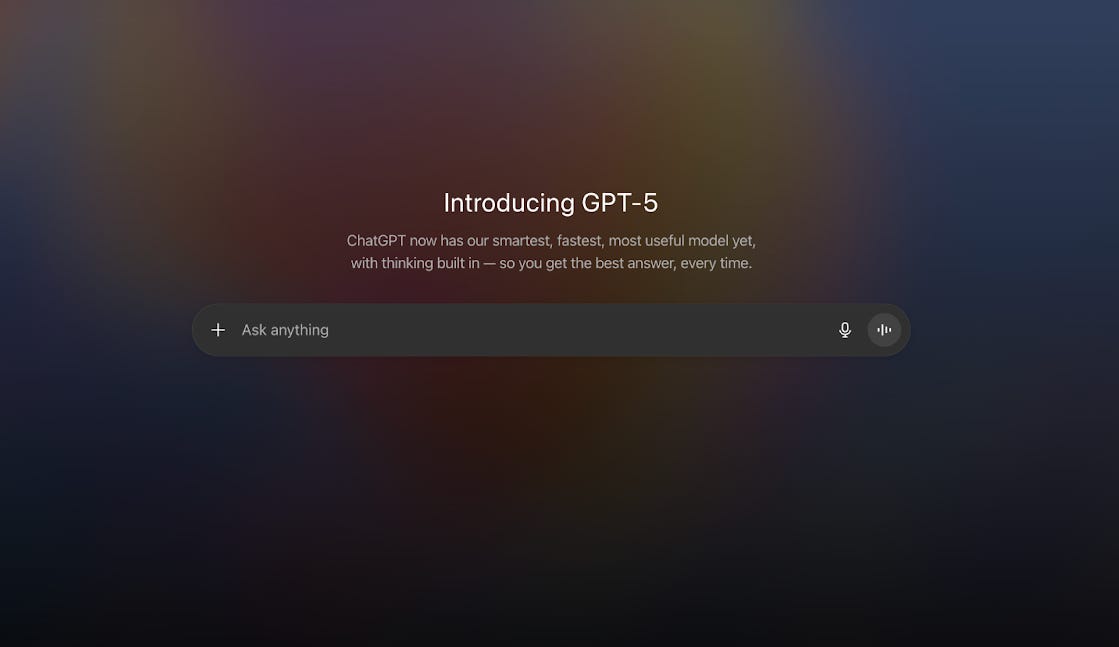

#19: GPT-5

When OpenAI rolled out GPT-5 yesterday, the company described it as a big leap in capability and safety.

8/9/2025

#18: Wikipedia, Propaganda, and the Hidden Risks for AI

It’s easy to forget that Wikipedia is one of the most powerful sources of information on the internet: it is the most cited source online and one of the most trusted.

7/8/2025

#17: Red Teaming: Why Breaking LLMs Might Save Them

In earlier posts, we looked at how LLMs are given direction through supervised fine-tuning and reinforcement learning from human feedback (RLHF), and at how they are kept safe. But just because a model can follow instructions doesn’t mean it should follow all of them.

6/22/2025

#16: Understanding How Safety is Built into LLMs

In my previous post, we explored how Large Language Models (LLMs) are trained to align with human preferences. Now, let's examine the broader landscape of LLM safety. As these models become increasingly integrated into our daily lives, ensuring their safe and ethical operation is critical.

6/2/2025

#15: Teaching LLMs to Align with Us: Supervised Fine-Tuning and RLHF

We’ve seen how large language models (LLMs) are pretrained on vast amounts of data and how they generate text one token at a time during inference.

5/25/2025

#14: How LLMs Generate Text: A Peek Inside the Inference Process

In the last few posts, we walked through how large language models (LLMs) are built — from collecting training data to breaking down words into tokens, and finally training the model on massive datasets. Today, we will discuss the inference process, which is the reason LLMs exist.

5/17/2025

#13: How LLMs Learn: A Beginner’s Guide to Training

In our last few posts, we explored what large language models (LLMs) are, how their data is collected and curated, and how that data is broken down into tokens. Now comes the question at the heart of it all: How do these models actually learn?

4/26/2025

#12: Understanding Tokenization in LLMs

In earlier posts, we explored how large language models (LLMs) work at a high level, followed by a deeper dive into how data is collected for training these models.

4/6/2025

#11: How Community Notes Work and Is it Working?

Taking a break from the LLM posts this week, I want to discuss Community Notes, the system pioneered by X/Twitter a few years ago.

3/23/2025

#10: Data Collection and Curation for LLM Training

In my last post, I discussed how LLMs work at a high level.

3/16/2025

#9: How LLMs Work: A Beginner’s Guide

I am sure that most of you reading this blog are aware of Large Language Models (LLMs) like ChatGPT, Claude, and DeepSeek.

3/2/2025

#8: Behind the Scenes: Amazon's OAK4 Fulfillment Center

Every second, Amazon processes over 100 orders.

2/15/2025

#7: Carl Zeiss: The Hidden Optics Powerhouse Behind Every Advanced Chip

In a previous article, I discussed ASML and its dominance in the production of Extreme Ultraviolet (EUV) technology for chip fabrication.

2/10/2025

#6: Canon Tokki: The Unsung Hero Behind our Gadgets

When you think of cutting-edge display technology, names like Samsung, LG, and Apple might come to mind.

1/25/2025

#5: ASML: The Technology Powering the Modern Digital Era

Did you know that nearly all the chips crucial to modern technology are printed by just a single company?

1/12/2025